Voice-Driven CRMs

In 2025, voice remains the most natural, emotionally rich, and trusted channel for customer interactions—73% of customers still prefer speaking to a human (or something indistinguishable) when issues become complex. Yet legacy CRM systems force agents into silent typing marathons while customers wait on hold.

The revolution is no longer coming—it is here. Voice-driven CRMs using modern conversational AI let customers talk naturally while the system understands what they mean, updates records, starts workflows, directs escalations, and replies instantly—all without the agent having to step away from the conversation.

This is not another chatbot layer bolted onto an old CRM. It is a complete rearchitecture of customer support around the human voice.

What Exactly Is a Voice-Driven CRM?

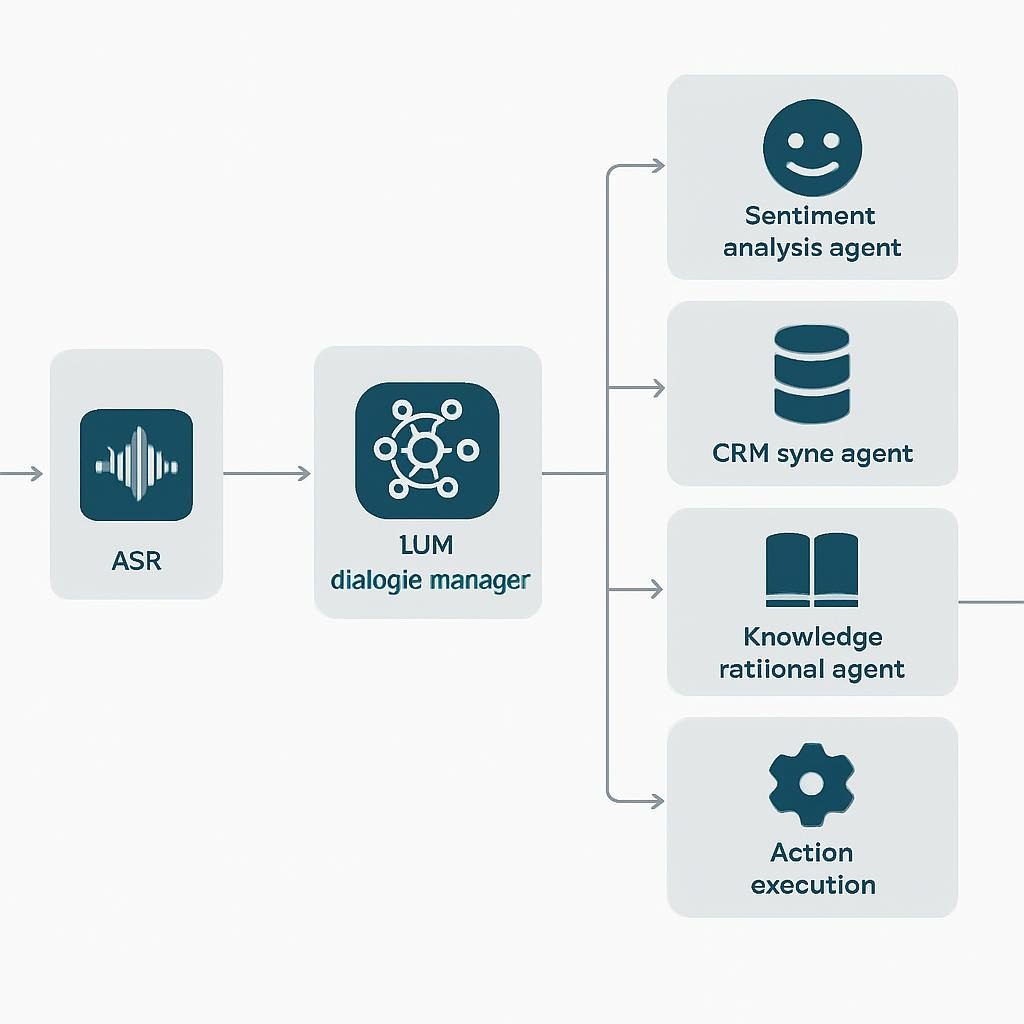

A voice-driven CRM is a platform for managing customer relationships where the primary interface for both customers and agents is spoken natural language, processed by large language models, automatic speech recognition (ASR), natural language understanding (NLU), dialogue management, and text-to-speech (TTS)—all tightly integrated with the CRM database, knowledge base, and business logic.

Unlike traditional voice bots that follow rigid scripts or IVR trees (which 51% of customers abandon), modern voice-driven systems maintain context across turns, handle interruptions, detect emotion, switch topics gracefully, and take autonomous actions such as:

- Updating opportunity stages

- Scheduling follow-ups

- Issuing refunds

- Orchestrating microservices
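
The autonomous actions above can be pictured as a dispatch from a recognized intent to a CRM operation. The following is a minimal Python sketch, not any vendor's API: the `CrmRecord` class, the intent names, and the slot keys are all hypothetical and exist only for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class CrmRecord:
    """Hypothetical in-memory stand-in for a CRM customer record."""
    customer_id: str
    opportunity_stage: str = "prospect"
    follow_ups: List[str] = field(default_factory=list)
    refunds: List[float] = field(default_factory=list)


def update_stage(record: CrmRecord, slots: dict) -> str:
    record.opportunity_stage = slots["stage"]
    return f"Stage set to {slots['stage']}"


def schedule_follow_up(record: CrmRecord, slots: dict) -> str:
    record.follow_ups.append(slots["when"])
    return f"Follow-up scheduled for {slots['when']}"


def issue_refund(record: CrmRecord, slots: dict) -> str:
    amount = float(slots["amount"])
    record.refunds.append(amount)
    return f"Refund of {amount:.2f} issued"


# Hypothetical intent names; a real NLU layer would define its own.
ACTIONS: Dict[str, Callable[[CrmRecord, dict], str]] = {
    "update_stage": update_stage,
    "schedule_follow_up": schedule_follow_up,
    "issue_refund": issue_refund,
}


def handle_intent(record: CrmRecord, intent: str, slots: dict) -> str:
    """Run the CRM action for an intent, or fall back to a human handoff."""
    action = ACTIONS.get(intent)
    if action is None:
        return "Escalating to a human agent"
    return action(record, slots)
```

In this sketch an unrecognized intent is never guessed at; it falls through to a human handoff, which is one simple way to keep autonomous actions bounded.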

The Technical Foundation: How Today’s Systems Achieve Human-Parity Conversations

State-of-the-art voice-driven CRMs in late 2025 rely on five core breakthroughs:

- End-to-end neural ASR with <300 ms latency (Deepgram, AssemblyAI, NVIDIA Riva)

- Large reasoning models fine-tuned for enterprise dialogue (GPT-4o, Claude 3.5, Grok-3, Llama-3.1-405B)

- Streaming voice interfaces that enable interruption handling and turn-taking (Retell AI, Vapi, Bland AI)

- Multi-agent orchestration frameworks where specialized agents handle subtasks in parallel (CrewAI, AutoGen, LangGraph)

- On-device or edge inference via WebAssembly (WASM) and quantized models for privacy-critical industries

The result? Systems that can pass domain-specific Turing tests, exceed 98% intent-recognition accuracy, and sound like the best human agents.
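
Interruption handling, the third item above, is easiest to see in code. The sketch below uses only Python's standard `asyncio` module and simulates both text-to-speech playback and voice-activity detection; it does not model the actual APIs of Retell AI, Vapi, or Bland AI, and the function names and timings are assumptions chosen for illustration.

```python
import asyncio
from typing import List


async def speak(chunks: List[str], spoken: List[str], chunk_seconds: float = 0.01) -> None:
    """Simulated TTS playback: emits one chunk at a time so it can be cancelled."""
    for chunk in chunks:
        spoken.append(chunk)
        await asyncio.sleep(chunk_seconds)


async def respond_with_barge_in(chunks: List[str], barge_in: asyncio.Event) -> List[str]:
    """Play a reply, but stop as soon as the caller starts talking."""
    spoken: List[str] = []
    playback = asyncio.create_task(speak(chunks, spoken))
    interrupt = asyncio.create_task(barge_in.wait())
    # Whichever finishes first wins: the reply completes or the caller barges in.
    await asyncio.wait({playback, interrupt}, return_when=asyncio.FIRST_COMPLETED)
    for task in (playback, interrupt):
        if not task.done():
            task.cancel()
    await asyncio.gather(playback, interrupt, return_exceptions=True)
    return spoken


async def demo() -> List[str]:
    barge_in = asyncio.Event()
    # Simulated voice-activity detection: the caller interrupts after 25 ms.
    asyncio.get_running_loop().call_later(0.025, barge_in.set)
    reply = ["Your", "refund", "has", "been", "issued", "today"]
    return await respond_with_barge_in(reply, barge_in)
```

Running `asyncio.run(demo())` returns only the chunks that were spoken before the interruption, which a dialogue manager could use to decide what the caller actually heard.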

Proven Impact: Metrics That Matter in 2025

| Metric | Traditional Support | Chat-Only AI | Voice-Driven CRM (2025) |
|---|---|---|---|
| Average Handle Time | 8–12 minutes | 4–6 minutes | 2.5–4 minutes |
| First-Contact Resolution | 65–75% | 78–85% | 91–96% |
| CSAT Score | 78–84 | 85–89 | 93–97 |
| Cost per Contact | $8–15 | $2–4 | $0.40–$1.20 |
| Agent Utilization Rate | 62% | 75% | 92% |

Sources: Gartner 2025 Customer Service Hype Cycle, Zendesk CX Trends 2025, internal benchmarks from Sierra, Decagon, and Bland AI deployments

Real-world examples:

- Chime (with Decagon Voice) achieved 94% containment on voice inbound with brand-perfect tone

- IPSY (with Ada) increased CSAT by 41% in four months after replacing IVR with AI voice agents

- A top-3 U.S. airline reduced refund processing time from 7 days to 90 seconds via voice-driven CRM actions

The Multi-Agent Future Already Shipping in 2025

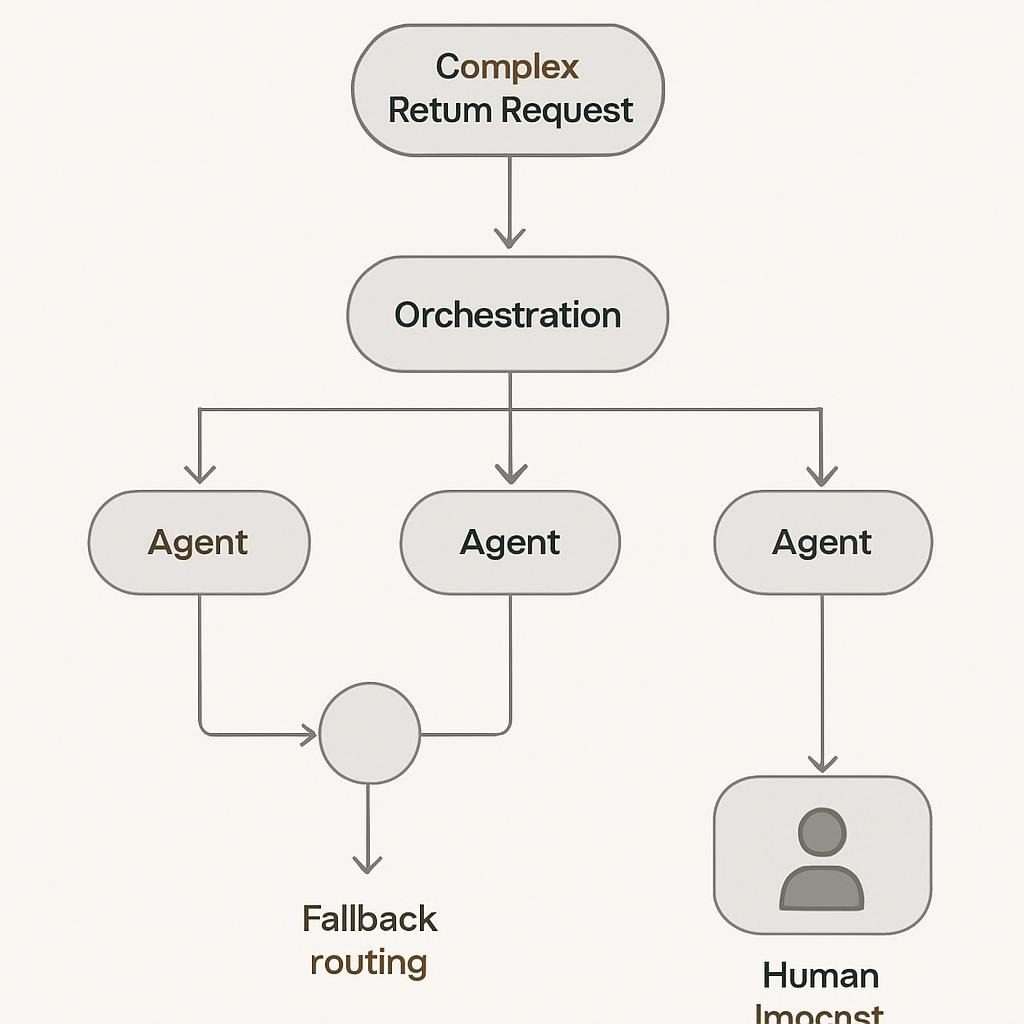

Leading deployments no longer rely on a single monolithic agent; instead, an orchestrator creates specialized sub-agents:

- Sentiment & escalation agent

- CRM write agent (atomic updates with audit trails)

- Compliance & redaction agent

- Knowledge retrieval agent (RAG over internal docs)

- Post-call summarization & follow-up agent

This architecture delivers 3–5× higher containment rates on complex, high-value interactions than single-agent systems.
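
The orchestrator pattern described above can be sketched in a few lines. This example uses plain `asyncio` rather than CrewAI, AutoGen, or LangGraph, and every agent here is a toy stand-in: the keyword lists, the knowledge snippets, and the card-number pattern are assumptions made for illustration only.

```python
import asyncio
import re
from typing import Dict

# Hypothetical knowledge base; a real system would run RAG over internal docs.
KNOWLEDGE = {
    "refund": "Refunds are issued to the original payment method.",
    "delivery": "Standard delivery takes three to five business days.",
}
NEGATIVE_WORDS = {"angry", "terrible", "cancel", "unacceptable"}


async def sentiment_agent(utterance: str) -> str:
    """Toy sentiment check: flag the call for escalation on negative keywords."""
    words = set(re.findall(r"[a-z']+", utterance.lower()))
    return "escalate" if words & NEGATIVE_WORDS else "ok"


async def redaction_agent(utterance: str) -> str:
    """Mask digit runs that look like payment card numbers (13 to 16 digits)."""
    return re.sub(r"\b\d{13,16}\b", "[REDACTED]", utterance)


async def retrieval_agent(utterance: str) -> str:
    """Return the first knowledge snippet whose keyword appears in the utterance."""
    lowered = utterance.lower()
    for keyword, passage in KNOWLEDGE.items():
        if keyword in lowered:
            return passage
    return ""


async def orchestrate(utterance: str) -> Dict[str, str]:
    """Fan out to the sub-agents in parallel, then build the CRM audit entry."""
    sentiment, redacted, passage = await asyncio.gather(
        sentiment_agent(utterance),
        redaction_agent(utterance),
        retrieval_agent(utterance),
    )
    # The CRM write step only ever sees the redacted text.
    return {"sentiment": sentiment, "knowledge": passage, "audit_entry": f"note: {redacted}"}
```

For example, `asyncio.run(orchestrate("This is unacceptable, refund card 4111111111111111"))` flags the call for escalation, retrieves the refund snippet, and stores a note with the card number masked.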

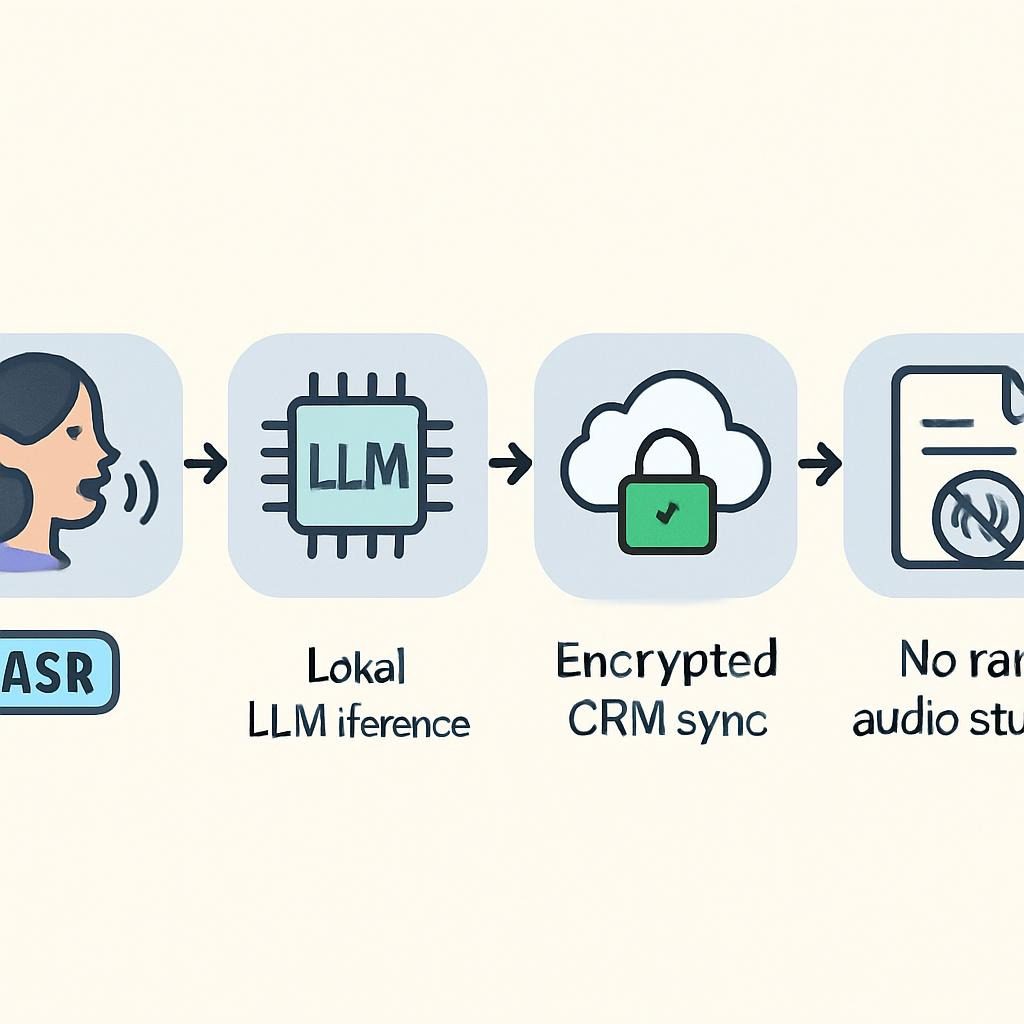

Privacy-First and Edge-Driven: The Next Frontier

Regulated industries (healthcare, finance, and government) are moving processing on-device or to private edge networks:

- WebAssembly-compiled 7B–70B models running in browser or mobile app (via Ollama + WASM, Transformers.js)

- Federated learning across enterprise devices without raw audio leaving premises

- Zero-knowledge proof verification of policy adherence

For example, a large European bank used Grok-3-70B quantized to 4 bits on NVIDIA edge appliances to deploy voice agents fully on-premises in Q4 2025. This resulted in response times of less than 500 milliseconds with no cloud audio transit.
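
To see why quantization matters for edge deployment, it helps to work out the weight storage. The helper below is rough arithmetic only: it counts the weights and ignores the KV cache, activations, and quantization metadata, so real memory use will be higher. The function name is ours, not from any library.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate weight storage in GB (10^9 bytes) for a model of the given size."""
    # params_billions * 1e9 weights * bits / 8 bits-per-byte / 1e9 bytes-per-GB
    return params_billions * bits_per_weight / 8


for size in (7, 70):
    fp16 = weight_memory_gb(size, 16)
    int4 = weight_memory_gb(size, 4)
    print(f"{size}B model: {fp16:.1f} GB at 16-bit, {int4:.1f} GB at 4-bit")
```

At 4 bits a 70B model needs about 35 GB for weights alone, versus about 140 GB at 16 bits, while a 7B model drops to about 3.5 GB.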

Challenges and Enterprise-Grade Solutions

| Challenge | 2023–2024 Reality | 2025 Enterprise Solution |
|---|---|---|
| Accent & noise handling | 72% accuracy on Indian English | 97%+ with custom acoustic models and noise suppression |
| Hallucinations & compliance | Frequent policy violations | Retrieval-augmented generation + compliance agents + human-in-the-loop review |
| CRM data accuracy | 40% error rate in updates | Dual-write verification + change confirmation loops |
| Integration complexity | 9–18 months | No-code voice gateways + pre-built connectors (30–90 day deployments) |
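
The "change confirmation loop" in the CRM data accuracy row can be sketched as follows. The article does not define the mechanism, so this is one plausible reading: read the change back to the caller, write only on confirmation, then re-read the stored value to verify it. `InMemoryCrm` and `confirmed_update` are hypothetical names, not part of any real CRM SDK.

```python
from typing import Callable, Dict, Optional


class InMemoryCrm:
    """Hypothetical store standing in for a real CRM API."""

    def __init__(self) -> None:
        self._rows: Dict[str, Dict[str, str]] = {}

    def read(self, customer_id: str, field_name: str) -> Optional[str]:
        return self._rows.get(customer_id, {}).get(field_name)

    def write(self, customer_id: str, field_name: str, value: str) -> None:
        self._rows.setdefault(customer_id, {})[field_name] = value


def confirmed_update(
    crm: InMemoryCrm,
    customer_id: str,
    field_name: str,
    value: str,
    confirm: Callable[[str], bool],
) -> bool:
    """Write a field only after the caller confirms, then verify by reading it back."""
    prompt = f"I'll change your {field_name} to {value}. Is that right?"
    if not confirm(prompt):
        return False  # caller rejected the change; nothing is written
    crm.write(customer_id, field_name, value)
    # Read-back verification; a real system would roll back or flag a mismatch for review.
    return crm.read(customer_id, field_name) == value
```

Here `confirm` would be backed by the voice channel (speak the prompt, parse a yes or no); passing it in as a callable keeps the write logic testable without audio.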

The Platforms Leading the Voice-Driven Revolution (Neutral 2025 Assessment)

| Platform | Best For | Voice Latency | Multi-Agent Support | On-Premise/Edge | 2025 Pricing Tier |
|---|---|---|---|---|---|
| Sierra | High-brand-experience enterprises | ~400 ms | Native | Hybrid | Custom (starts at ~$150k/yr) |
| Decagon | Financial services & complex workflows | ~350 ms | Advanced | Private cloud | $1.20 per resolved conversation |
| Bland AI | Outbound + inbound scale | ~300 ms | Via API | Full on-prem | $0.09/minute |
| Retell AI | Developer-first custom agents | ~280 ms | Strong | Hybrid | $0.07/minute + LLM costs |
| Cognigy | Global enterprises (80+ languages) | ~450 ms | Native | On-prem | Custom enterprise |
| Voiceflow + Grok-3 | Rapid prototyping & SMB | ~500 ms | Via LangGraph | Cloud | $0.05–0.15/minute |
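
Per-minute and per-resolution pricing are hard to compare at a glance, so a small calculation helps. The sketch below uses only the list prices from the table and ignores LLM costs, telephony fees, and unresolved conversations, so treat the numbers as a rough guide rather than a quote. The function names are ours.

```python
def per_minute_cost(rate_per_minute: float, minutes: float) -> float:
    """Cost of one call under per-minute pricing."""
    return rate_per_minute * minutes


def break_even_minutes(price_per_resolution: float, rate_per_minute: float) -> float:
    """Call length at which per-minute pricing costs the same as a flat per-resolution fee."""
    return price_per_resolution / rate_per_minute


# A 4-minute call at $0.09/minute versus a flat $1.20 per resolved conversation.
print(f"4-minute call at $0.09/min: ${per_minute_cost(0.09, 4):.2f}")
print(f"Break-even call length: {break_even_minutes(1.20, 0.09):.1f} minutes")
```

Under these assumptions a 4-minute call costs $0.36 at $0.09/minute, and per-minute pricing only becomes more expensive than $1.20 per resolution once calls run past roughly 13.3 minutes.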

Conclusion: The End of Typing-Based Support

By the end of 2026, typing customer notes will feel as archaic as sending business letters by fax in 2010.

The companies winning today are those treating voice not as another channel, but as the central nervous system of their entire customer relationship architecture.

Voice-driven CRMs are not incrementally better customer support—they are an entirely new operating system for customer relationships.

The transformation is no longer optional. It is inevitable.
